11.6.2012, pesco

tags: datalang langsec hammer code

Inspired by their talk

The Science of Insecurity

I took Meredith Patterson and Sergey Bratus by their word and

tried to solve my next network communication problem

without crossing the line beyond deterministic context-free

languages.

The upshot of said talk was that most if not all security problems

stem from the fact that some software component could not

foresee the consequences of its input.

From a language-theoretic point of view,

the problem boils down

to

recognizing the set (

language

) of acceptable inputs.

There are different classes of languages

whose recognizers require increasingly complex mechanisms.

Things are basically pleasant with

regular languages and one step up,

aforementioned

deterministic context-free ones.

Up to this point we can algorithmically decide

whether two specifications describe the same language;

whether two peers in the network are cleanly interoperable.

When I was looking for a good

data serialization format,

in addition to my original requirements,

I went looking for one that had a deterministic context-free grammar.

Incidentally, one of the things I wanted to be able to do

was efficiently transfer relatively large blocks of arbitrary data.

Unfortunately, what immediately catapults you into the

land of (mildly) context-

sensitive languages are length fields.

JSON (as implied by the title) would have been my favorite choice,

but the best way to put binary blobs in it is

by encoding them as Base64-encoded strings.

{ "message": "Hi, Bob!"

, "attachment": "ZGFmdXFpc3RoaXM="

}

For one thing, this means overhead in encoding time, decoding time and data volume.

Also it is unsatisfying because one property of JSON is

self-descriptiveness:

recognizing a JSON value reveals its type.

Base64 blobs would be hidden in strings and force the recipient

to know exactly where to expect them.

A short note about overhead and efficiency concerns.

It is generally rightfully considered foolish to optimize prematurely.

From

most standpoints, computers are fast, bandwidth is cheap

and you are probably wasting ten times as much elsewhere

as avoiding Base64 would ever save.

Nevertheless, optimizing for efficiency is not useless

and in the right place, a constant factor can make all the difference.

Base64

will turn your 3GB download into a 4GB one.

More importantly,

I am treating this endeavor as much as an academic as a practical one,

asking

could we

as much as

do we want to

.

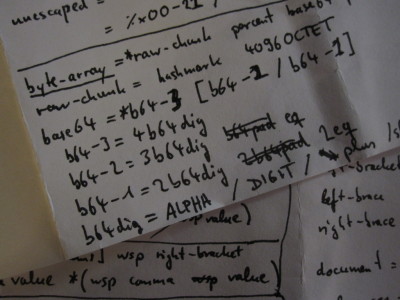

So below is the answer I came up with.

The idea is to break binary data into chunks of uniform size.

I chose 4096 bytes rather arbitrarily.

Allow one final chunk of variable length and encode that one in Base64.

So every 4kB, there is one character (

#)

which means

another 4k coming

.

There need not be any such

raw chunks

;

they are always followed by exactly one

(possibly empty) Base64 string enclosed in

%.

Examples:

#.....#.....%ZGFmdXFp%

#.....#.....%%

%ZGFmdXFp%

This syntax is added to JSON, along with a few other extensions.

Design goals

- Stay deterministic context-free.

- Avoid escaping or re-encoding every byte in binary blobs.

- Simple grammar.

- Self-describing structure.

- Stay reasonably human-readable and human-writable.

- Minimize attack surface for bugs.

- Plus: Allow exact representation of binary floating point numbers.

- Plus: Allow strings to use any character encoding.

- Plus: Do not use newlines as syntax, allow arbitrary values to be serialized

into single lines.

- Plus: Provide for easy streaming of values.

Non-goals

- Optimal size.

- Optimal speed.

Notwithstanding the goal to support efficiency with large blobs,

this format is not meant to squeeze every last bit out of everything.

That conflicts with self-descriptiveness and is what

Protocol Buffers

are for.

Characteristics

- Proper superset of UTF-8-encoded JSON.

- Types:

- Null

- Boolean

- Number

- Byte-Array

- String

- List (= JSON

arrays

)

- Record (= JSON

objects

)

- Defined in ABNF.

- ~150 lines.

- Transcribes trivially to a PEG.

- All syntax is ASCII.

- No other external encodings allowed.

- Strings are tagged with their encoding.

- No tag means UTF-8.

- JSON-style unicode escapes \u.... supported for compatibility.

- UTF-16 surrogate pairs recognized by grammar.

- Parsers SHOULD properly recode these for UTF-8 strings.

- Arbitrary bytes in strings via hexadecimal escapes \x...

- Numbers are arbitrary-precision rationals.

- Hexadecimal notation for numbers supported.

- Including hexadecimal fraction and exponent notation.

- Top-level

document

consists of one value of any type.

- JSON only allows arrays and objects.

- Defined syntax for top-level value streams.

- Values terminated by newlines.

- Allows parser for rule stream-element to

simply be called repeatedly on input stream.

Example

{ "null": null

, "boolean": true

, "integer": 1234

, "rational": 1234.56

, "exponent": 1234.56e2

, "hexadecimal": 0x123AB.CDxE

, "bytes": %ZGFmdXFp%

, "string": "Hello"

, "encoding": "Mot\xF6rhead"_latin1

, "list": [23,"skidoo"]

, "record": {}

}

Show me the code!

Glad you asked!

The child currently carries the rather stupid working title

datalang

and resides in a

repository here:

darcs get http://code.khjk.org/datalang/

Included is the

ABNF grammar

as well as a

demo parser

implemented in C using

hammer.

Oh right,

hammer.

Given that this post has already turned into a novel,

I am going to save that for later.

PS:

If anyone thinks of a better name than

datalang,

your suggestion is very welcome at my easily-guessed email address.